A Flexible Neural Renderer for Material Visualization

Aakash KT¹, Parikshit Sakurikar¹,², Saurabh Saini¹ and P. J. Narayanan¹

¹IIIT Hyderabad

²DreamVu Inc.

ACM SIGGRAPH Asia 2019, Technical Briefs

Abstract

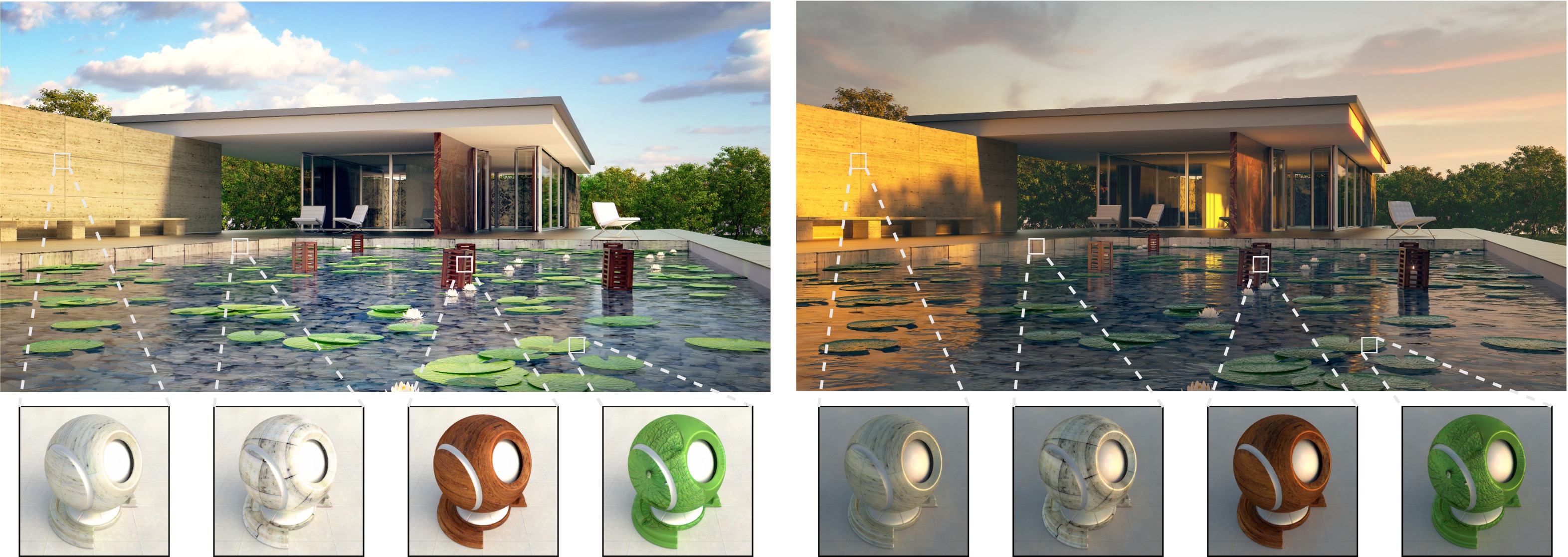

Photorealism in computer-generated imagery depends crucially on how well an artist can recreate real-world materials in the scene. The workflow for material modeling and editing typically involves manually tweaking material parameters and relies on a standard path tracing engine for visual feedback, so considerable time can be spent iteratively selecting materials and rendering them at adequate quality. In this work, we propose a convolutional neural network that quickly generates high-quality ray-traced material visualizations on a shaderball. Our novel architecture allows control over environment lighting, which assists in material selection, and can also render spatially-varying materials. Comparison with state-of-the-art denoising and neural rendering techniques suggests that our neural renderer runs faster and produces higher-quality visualizations. We provide an interactive visualization tool and an extensive dataset to foster further research in this area.

Acknowledgements

We thank the reviewers of our SIGGRAPH Asia 2019 submission for their valuable comments and suggestions.